“New York Life all said they’ve never hired prompt engineers, but instead found that—to the extent better prompting skills are needed—it was an expertise that all existing employees could be trained on.”

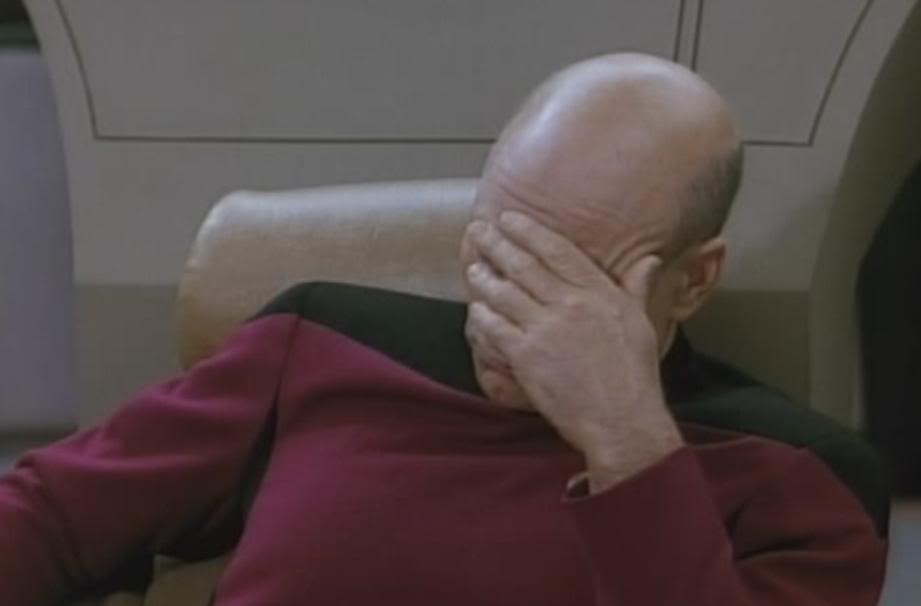

Are you telling me that the jobs invented to support a bullshit technology that lies are themselves ALSO bullshit lies?

How could this happen??

Screw New York Life, but LLMs are definitely not bullshit technology. Some amount of skill in so-called ‘prompt engineering’ makes a huge difference in using LLMs as the tools that they are. I think the big mistake people are making is using them like a search engine. I use them all the time (in a scientific field) but never in a capacity where they can ‘lie’ to me. They’re very effective ‘assistants’ for both [simple] coding tasks and data analysis/management.

The gap between expected behavior and actual behavior is narrowing with each iteration, plus people are starting to understand the limitations a bit better. The things you’re talking about that AI does well are being parceled off as AI agents for monetization, and they don’t require additional staff to oversee; they’re turnkey solutions.

The headline here is that AI is costing us jobs but not replacing them. And if you’re concerned that AI is a bubble, imagine what that’ll mean when it bursts and these companies start faltering and being bought up. This is all mindless disruption with no foresight.

prompt engineer

They were paying people to fucking ask it questions? A professional Google searcher?

To be fair, there are tricks to it just like there are tricks for getting better Google results. But “prompt engineering” isn’t a fucking career.

It is evidence of a leadership team that is just clueless.

Exactly this. SEO (search engine optimization) is huge, and “prompt engineering” is similarly valuable, though it’s quite different from SEO. I wouldn’t think either is a full-time position, but learning to effectively prompt and use LLMs is definitely a skill.

In my experience, SEO is largely bullshit too, and the rest is so simple you could summarize it on maybe two regular pages of paper. It’s also documented on pages that the search engines publish themselves (stuff like duplicate content, stable URLs, which status codes to use when…).

Former SEO here.

The content management side of SEO is sort of bullshit, yes. However, I saved an entire website from complete deindexing because I was able to determine that Google was rendering the page differently than users saw it: all Google saw was a giant blank overlay because of the way the cookie privacy banner was implemented. Ain’t no web developers that I know who are looking into that shit!

Also, figuring out sitewide page implementation and usability is big. Basically, technical SEO is a big damn deal, and it can go hand in hand with general content creation.

I agree on both counts. Honestly, a lot of companies are probably just posting job openings (that will purposely remain vacant) with titles like AI Prompt Engineer and SEO Specialist to help boost shareholder confidence. I think I’m just pushing back against the idea that LLMs should be used like a search engine. I know you didn’t suggest that, but I’ve been reading a lot recently about how ‘ChatGPT lies!’ when in reality people are wrongly using a pattern recognition system as if it’s a search engine.

I’m in IT. A lot of my job is Googling the answer, but I have to know what to ask and what to look for when sifting through the results, which most employees won’t know.

A photographer will know better than the average Joe what to input to get a better photographic image out of ChatGPT, by specifying f-stop/aperture, shutter speed, ISO, lens, bokeh/depth of field, rule of thirds, etc.

But yes… we’re getting closer and closer to George Jetson’s job of pushing one button and calling it a day.

Yay!

No shit.

Pretty sure lots of kids writing a paper for school are “prompt engineers”.